DOT HS 813 544 March 2024

Traffic Records Data Quality Management Guide: Update to the Model Performance Measures for State Traffic Records Systems

This page intentionally left blank.

DISCLAIMER

This publication is distributed by the U.S. Department of Transportation, National

Highway Traffic Safety Administration, in the interest of information exchange.

The opinions, findings, and conclusions expressed in this publication are those of

the authors and not necessarily those of the Department of Transportation or the

National Highway Traffic Safety Administration. The United States Government

assumes no liability for its contents or use thereof. If trade names, manufacturers’

names, or specific products are mentioned, it is because they are considered

essential to the object of the publication and should not be construed as an

endorsement. The United States Government does not endorse products or

manufacturers.

Suggested APA Format Citation:

Osbourn, C., Ruiz, C., Haney, K., Scopatz, B., Palmateer, C., & Bianco, M. (2024, March). Traffic

records data quality management guide: Update to the model performance measures for

state traffic records systems (Report No. DOT HS 813 544).

Technical Report Documentation Page

Form DOT F 1700.7 (8-72) Reproduction of completed page authorized

1. Report No.

DOT HS 813 544

2. Government Accession No.

3. Recipient's Catalog No.

4. Title and Subtitle

Traffic Records Data Quality Management Guide: Update to the Model

Performance Measures for State Traffic Records Systems

5. Report Date

March 2024

6. Performing Organization Code

7. Author

Osbourn, C., Ruiz, C., Haney, K., Scopatz, B., Palmateer, C., Bianco, M.

8. Performing Organization Report No.

9. Performing Organization Name and Address

VHB

Venture I

940 Main Campus Drive, Suite 500

Raleigh, NC 27606

10. Work Unit No. (TRAIS)

NSA-221

11. Contract or Grant No.

12. Sponsoring Agency Name and Address

National Highway Traffic Safety Administration

1200 New Jersey Avenue SE

Washington, DC 20590

13. Type of Report and Period Covered

Final Report

14. Sponsoring Agency Code

15. Supplementary Notes

The COR for this report was Tom Bragan.

16. Abstract

The Traffic Records Data Quality Management Guide builds upon the information in the NHTSA 2011 report,

Model Performance Measures for State Traffic Records Systems, by describing data quality performance

measurement in the context of a data quality management program. The performance measurement process

enables stakeholders to quickly identify potential data quality challenges within a traffic records system, track

project progress, and monitor data quality improvements or degradations over defined periods. Establishing

meaningful data quality performance measures is fundamental to that process and is essential to a successful

data quality management program for a State’s traffic records system. NHTSA created this update in response to

a review of State Traffic Records Assessment reports and State Traffic Safety Information System

Improvements grants funding applications. This review found that States struggle with developing and using

meaningful data quality performance measures. To address this challenge, this guide includes information on

data management, stakeholder involvement, and developing and using data quality performance measures.

17. Key Words

performance measure, quality standard, traffic records,

Twelve Pack

18. Distribution Statement

Document is available to the public from the

DOT, National Highway Traffic Safety

Administration, National Center for Statistics

and Analysis, https://crashstats.nhtsa.dot.gov.

19. Security Classif. (of this report)

Unclassified

20. Security Classif. (of this page)

Unclassified

21. No. of Pages

53

22. Price

Table of Contents

Acronyms List

Key Terms

Executive Summary

Overview

Audience

The Role of Data Quality Management in State Traffic Safety Programs

Introduction to Data Quality Management

Overview

Stakeholders

TRCC Involvement

Consideration of Data Quality Management When Updating Data Systems

Electronic Data Transfer and How to Use Data Quality Management to Support EDT

Traffic Records “Twelve Pack”: Six Core Data Systems and Six Data Quality Attributes

Six Core Data Systems

Data Quality Attributes

Performance Measurement

Defining Data Quality Performance Measures

Identifying Stakeholders

Developing Performance Measures

Using Performance Measures

Example Performance Measures

Model Performance Measures by System

Key Takeaways

Resources

Traffic Records Program Assessment Advisory, 2018 Edition (NHTSA, 2018)

GO Team Technical Assistance Program

Guide to Updating State Crash Record Systems (DOT HS 813 217)

State Traffic Records Coordinating Committee Strategic Planning Guide (Peach et al., 2019)

Crash Data Improvement Program (CDIP) Guide (Scopatz et al., 2019)

Roadway Data Improvement Program (RDIP) (Chandler et al., 206)

Model Minimum Uniform Crash Criteria (NHTSA, 2024)

References

List of Tables

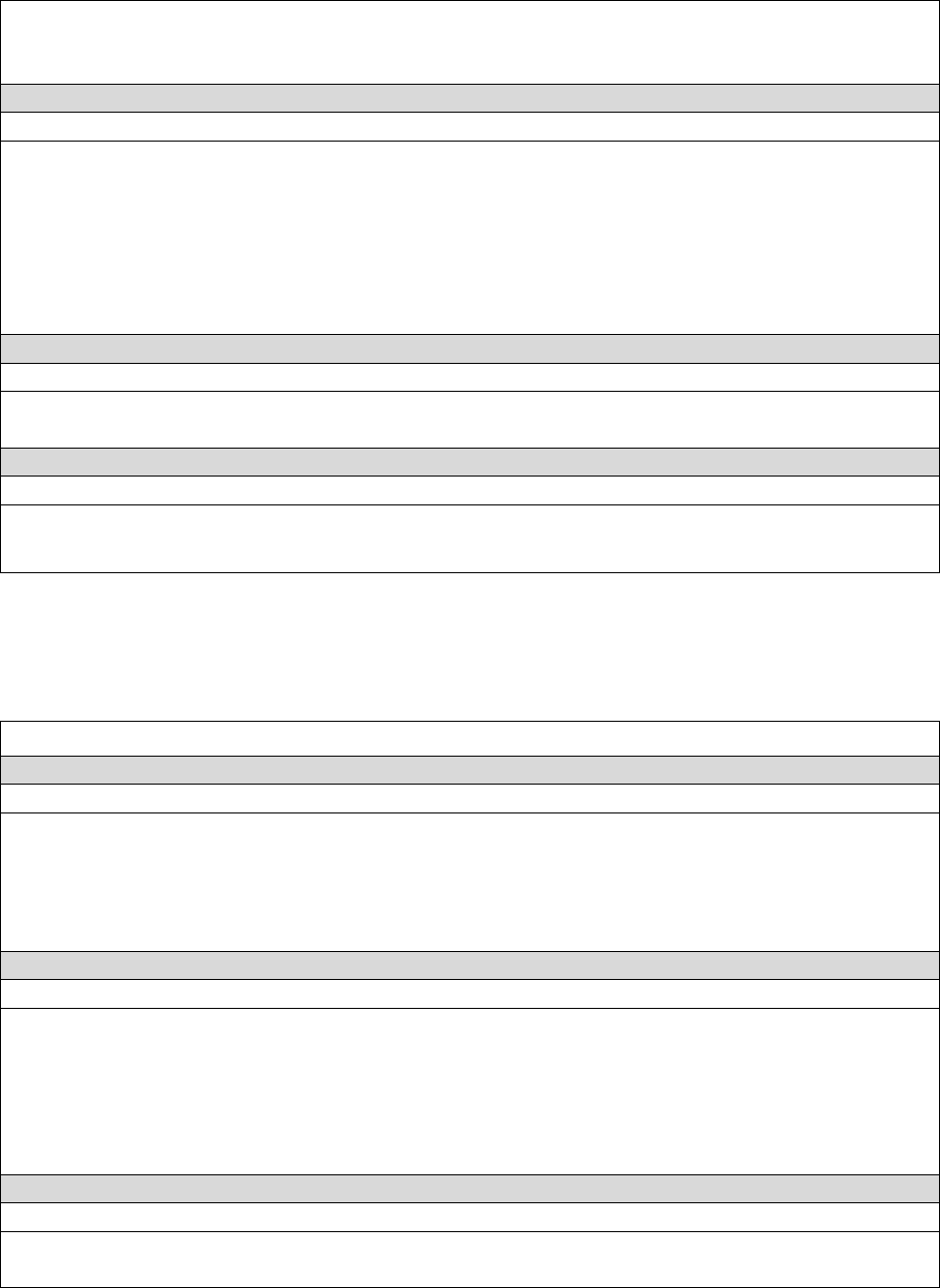

Table 1. Performance Measurement Components

Table 2. Performance Measures Examples Applicable to All Traffic Records System Databases

Table 3. Crash System Performance Measures Examples

Table 4. Driver System Performance Measures Examples

Table 5. Vehicle System Performance Measures Examples

Table 6. Roadway System Performance Measures Examples

Table 7. Citation and Adjudication System Performance Measures Examples

Table 8. Injury Surveillance System Performance Measures Examples

Acronyms List

AADT average annual daily traffic

AAMVA American Association of Motor Vehicle Administrators

ANSI American National Standards Institute

CDIP Crash Data Improvement Program

CDLIS Commercial Driver’s License Information System

CODES Crash Outcome Data Evaluation System

DWI driving while impaired

EDT electronic data transfer

EMS emergency medical services

FARS Fatality Analysis Reporting System

FDE fundamental data element

FHWA Federal Highway Administration

GHSA Governors Highway Safety Association

GIS geographic information system

HPMS Highway Performance Monitoring System

HSIP Highway Safety Improvement Program

HSP Highway Safety Plan

IT information technology

LEA law enforcement agency

LRS linear referencing system

MIDRIS Model Impaired Driving Records Information System

MIRE Model Inventory of Roadway Elements

MMUCC Model Minimum Uniform Crash Criteria

MOU memorandum of understanding

NEMSIS National Emergency Medical Services Information System

PDO property damage only

PDPS Problem Driver Pointer System

QA quality assurance

QC quality control

RDIP Roadway Data Improvement Program

SHSP Strategic Highway Safety Plan

SMART specific, measurable, attainable, relevant, timely or time-based

SSN Social Security Number

SSOLV Social Security Online Verification

STRAP State Traffic Records Assessment Program

TRCC Traffic Records Coordinating Committee

TRSP Traffic Records Strategic Plan

VIN Vehicle Identification Number

Key Terms

This document uses terms that have been defined in the National Highway Traffic Safety

Administration’s Traffic Records Program Assessment Advisory, 2018 Edition (NHTSA, 2018), NHTSA’s

State Traffic Records Coordinating Committee Strategic Planning Guide (Peach et al., 2019), and

the Code of Federal Regulations’ Uniform Procedures for State Highway Safety Grant

Programs, Title 23, Chapter III, Part 1300.

Baseline: A minimum or starting point used for comparisons.

Data attribute: A specific value or selection for a data element (or variable). For example, for

the data element “Type of Intersection,” attributes could include T-Intersection, Y-Intersection,

Circular Intersection, etc.

Data element: Individual variables or fields coded within each record (e.g., Type of

Intersection).

Data file: A dataset or group of records within a data system or database. A data system may

contain a single data file—such as a State’s driver file—or more than one. For example, the

injury surveillance system consists of separate files for the emergency medical service,

emergency department, hospital discharge, trauma registry, and vital records.

Data governance: A set of processes that verifies that important data assets are formally

managed throughout the enterprise.

Data integration: Refers to establishing connections between the six major components of the

traffic records system (crash, vehicle, driver, roadway, citation and adjudication, and injury

surveillance). Each component may have several sub-systems that can be integrated for

analytical purposes. The resulting integrated datasets enable users to conduct analyses and

generate insights impossible to achieve if based solely on the contents of any singular data

system.

Data interface: Seamless, on-demand connectivity and a high degree of interoperability

between systems that supports critical business processes and enhances data quality. An interface

refers to the real-time transfer of data between data systems (e.g., auto-populating a crash report

using a bar code reader for a driver license).

Data linkages: The connections established by matching at least one data element from a record

in one file with the corresponding data element or elements in one or more records in another file

or files. Linkages may be further described as interface linkages or integration linkages

depending on the nature and desired outcome of the connection.

Data system: One of the six State traffic records system components—crash, driver, vehicle,

roadway, citation and adjudication, and injury surveillance—that may comprise several

independent databases with one primary data file.

Goal: A high-level statement of what the organization hopes to achieve.

Need: A challenge that the State considers in the Traffic Records Strategic Plan.

Objective: A quantified improvement the Traffic Records Coordinating Committee hopes or

wants to achieve by a specific date through a series of related projects.

Performance measure: A quantitative statement, established through the performance

measurement process, which is used to assess progress toward meeting a defined performance

target. Refer to Table 1. Performance Measurement Components for an example of a

performance measure.

Performance measurement: The process of creating and maintaining performance measures to

assess progress toward meeting a defined performance target. Refer to the Performance

Measurement section for further information.

Performance value: A numeric indicator used for comparing and tracking performance over

time. Refer to Table 1. Performance Measurement Components for an example of a performance

value.

Performance target: A quantifiable level of performance or a goal, expressed as a value, to be

achieved within a specified time period. Refer to Table 1. Performance Measurement Components for

an example of a performance target.

Problem identification: The data collection and analysis process for identifying areas of the

State, types of crashes, or types of populations (e.g., high-risk populations) that present specific

safety challenges to efforts to improve a specific program area.

Record: All the data stored in a database file for a specific event (e.g., a crash record, a patient

hospital discharge record).

State: The 50 States, Tribal Nations, the District of Columbia, Puerto Rico, and the U.S.

Territories. These are the jurisdictions eligible to receive State Traffic Safety Information System

Improvements grants. In this context, “State” should be understood to include these additional

jurisdictions.

Executive Summary

A sustainable data quality management program is fundamental to data that is trustworthy and

dependable for decision-makers. Organizational decisions based on

poor-quality data can have an undesirable effect on traffic safety. Decisions based on poor data

risk incorrect problem identification or the allocation of resources or funding to areas that have a

lesser impact on improving traffic safety. The proper appropriation of a State’s limited traffic

safety resources is crucial to impacting key safety focus areas such as impaired driving, occupant

protection, distracted driving, and non-motorist safety.

The Traffic Records Data Quality Management Guide builds upon the information in the Model

Performance Measures for State Traffic Records Systems (NHTSA, 2011) by describing data

quality performance measurement in the context of a data quality management program. NHTSA

acknowledges and thanks the expert panel who created the original document for their efforts in

improving data quality.

The performance measurement process enables stakeholders to quickly identify potential data

quality challenges within a traffic records system, track project progress, and monitor data

quality improvements or degradations over defined periods. Establishing meaningful data quality

performance measures is fundamental to that process and is essential to a successful data quality

management program for a State’s traffic records system.

NHTSA created this update in response to a review of State Traffic Records Assessment reports

and State Traffic Safety Information System Improvements grants funding applications. This

review found that States struggle with developing and using meaningful data quality

performance measures. To address this challenge, this guide includes information on data

management, stakeholder involvement, and developing and using data quality performance

measures.

Overview

NHTSA developed the Traffic Records Data Quality Management Guide for State data

collectors, managers, and users to implement methods for improving data quality to support

safety decision-making. This guide represents an update to NHTSA’s Model Performance

Measures for State Traffic Records Systems (2011). The original document was a collaboration

between NHTSA and the Governors Highway Safety Association in which an expert panel of

State traffic safety professionals developed example traffic records data quality performance

measures. While States adopted many of those example measures, State TRSPs and grant

funding applications too often show a misunderstanding of the meaning, purpose, and effective

application of data quality performance measures.

This update extends the scope by providing a context for data quality performance measurement

and placing it as a vital part of the formal, comprehensive data quality management program

described in the Traffic Records Program Assessment Advisory, 2018 Edition (NHTSA, 2018).

Audience

Data users, Traffic Records Coordinating Committee members, and other traffic records

stakeholders can use this guide to:

• Identify the roles and responsibilities of their agency personnel in managing data quality;

• Measure data quality over time;

• Identify opportunities for improvement and develop procedures to measure data quality

over time; and

• Improve the understanding of how the traffic records system supports their safety

initiatives.

Data system managers can use this guide to:

• Develop performance measures to evaluate system improvements;

• Articulate to the TRCC the business need for updating components of the State data

system;

• Engage existing TRCC members and other traffic records stakeholders;

• Incorporate new stakeholders into performance management;

• Recognize the necessity of data system edit checks and articulate how to implement them

as part of a data quality control program; and

• Understand and incorporate the needs of the data collectors when developing effective

performance measures.

Data collectors can use this guide to:

• Identify the roles and responsibilities of their agencies’ personnel in managing data

quality;

• Understand why system managers and the TRCC use performance measures to track and

improve the data they collect;

• Identify challenges and opportunities to improve the data collection process (including

opportunities for human factors improvement) to inform the data system managers and

the TRCC; and

• Articulate feedback to data system managers and the TRCC on the quality control efforts

that have been implemented (e.g., new edit checks, procedures, documentation).

The Role of Data Quality Management in State Traffic Safety Programs

Quality traffic records data is vital for traffic safety analysis and traffic safety programming.

Maintaining a traffic records system that supports data-driven decision-making is necessary to

identify problems and opportunities for improvement; develop, deploy, and evaluate

countermeasures; and efficiently allocate resources.

Strategic Planning

Quality traffic records data is essential to effective strategic planning. States are encouraged to

implement a strategic planning process that is realistic and supported by their current structure,

authority, and capabilities. Over time, States can refine and improve their strategic planning and

implementation processes to address identified traffic records needs for data quality

improvement. Traffic records stakeholders, such as the State TRCC, should engage in strategic

planning efforts to help identify and address traffic safety data quality issues to move the State

towards improved data and better data quality management.

Data Governance

The advisory describes data governance as the formal management of a State’s data assets.

Governance includes a set of documented processes, policies, and procedures that are critically

important to integrate traffic records data. These policies and procedures address and document

data definitions, content, and management of key traffic records data sources within the State.

Such data standards applied across platforms and systems provide the foundation for data

integration and comprehensive data quality management. Data governance is a comprehensive

approach that includes data quality management as a necessary component.

Data governance varies widely by State and involves a variety of traffic safety stakeholders,

including data stewards, custodians, collectors, and users. The

TRCC is ideally positioned to aid in developing the necessary data governance, access, and

security policies for datasets that include several sources from several agencies. This formal data

governance process can lead to improved data quality and traffic records system documentation,

greater support for business needs, more efficient use of resources, and reduced barriers to

collaboration.

Relationship to Other Plans

Quality traffic records data provides the foundation for key State traffic safety plans. States need

timely, accurate, complete, and uniform traffic records data to identify and prioritize traffic

safety issues, develop appropriate countermeasures, and evaluate their effectiveness. There are

many commonalities among State safety planning efforts including data quality performance

measurement. Performance measurement is a key component of traffic records data quality

management. States are responsible for developing, monitoring, evaluating, and updating State

safety plans using performance measures and targets to track their progress toward data quality

improvement.

One way to reduce duplication of efforts is to incorporate the State TRCC’s strategic planning

and data quality management practices into other State safety plans such as the Strategic

Highway Safety Plan, Highway Safety Plan, Highway Safety Improvement Program, local

agency plans, or State agency efforts. Through agency collaboration on these State safety plans,

States are better able to recognize the value of including traffic records data quality in their

planning and encourage broad, multi-agency support for the State’s data quality management

efforts. The State’s traffic records strategic planning process can benefit from stakeholders’

engagement in the development of other safety plans. This can be accomplished by informing

traffic records stakeholders of changes occurring in specific data systems that may have

widespread impacts, expanding the TRSP to include non-§405(c) funded projects, and increasing

visibility of the TRSP with other agencies. These strategic planning efforts enhance the State’s

ability to conduct traffic safety problem identification, select and develop countermeasures, and

measure the effectiveness of said countermeasures.

States that do not have an existing comprehensive, formal data quality management program can

start one by creating traffic records data quality performance measures and targets. The formal

data quality management processes described in the advisory include a role for the TRCC in

reviewing and providing oversight for data quality performance measurements. State TRCCs

identify which Traffic Records Assessment recommendations the State intends to address, which

projects will address each recommendation, and the performance measures used to track

progress. The TRCC’s TRSP performance management should be coordinated with the data

quality performance measures of the State’s SHSP, HSP, and any other traffic records

improvement efforts to prevent States from implementing projects that overlap and to add new

projects as new needs and opportunities are identified.

Introduction to Data Quality Management

Overview

Data quality management is a formal, comprehensive program that includes policies, procedures,

and the people responsible for implementing them. In this guide, data quality improvement has

the specific goal of supporting traffic safety decision-making to save lives, prevent injuries, and

reduce the economic costs of traffic crashes.

As part of data quality management, it is important to distinguish between quality assurance and

quality control. QA comprises the program- and policy-level aspect of data management and is

typically the same for all data a department manages. It sets out the roles and responsibilities,

program components, and expectations for the quality management program. QC comprises the

specific steps and the organization’s reaction to identified data quality problems. It is the list of

data quality initiatives—how quality is measured and the actions that can be taken in response to

specific data quality problems. QA is the manual, and QC is the toolkit. QA and QC together

comprise the organization’s formal, comprehensive data quality management program.

To accomplish this goal, a traffic records data quality management program should include the

following QC components:

• Edit checks and validation rules: These are automated evaluations of values in data

fields against defined ranges or allowed (expected) values, including verifications of

logical agreement among the values. Often called data system business logic, validation

rules test the consistency within a data element and edit checks test the consistency

between two or more data elements. The purpose is to objectively assess the data against

established standards, definitions, and requirements. When implemented as part of a data

quality management program, edit checks and validation rules can minimize data entry

errors by alerting data collectors to problems before they submit their report. They can

also serve as filters at data intake so that reports containing errors can be flagged for

rejection or correction. Edit checks and validation rules must be regularly monitored for

effectiveness and efficiency and modified as necessary. Data collector feedback is

essential to confirm that the edit checks and validation rules are working properly.

• Periodic quality control data analysis processes: This is a periodic analysis of most (or

at least the key or important) fields in the database to compare newly received data to

data from the past. This type of analysis is used to flag unexpected changes in the data so

that analysts and other key personnel can investigate what may have happened and whether the

changes indicate a data quality problem or reflect reality. States may choose to repeat the

QC analyses monthly, quarterly, or annually, as best fits their quality management program.

• Data audit processes: An audit process using a random selection of reports from the

database and expert-level review of the data in each of the cases helps data managers

identify if there are any data collection problems (such as misinterpretations of data

element definitions) that are not being flagged as errors by the current edit checks and

validation rules. Audits can prompt changes to training, guidance, manuals, data

definitions, supervisors’ data reviews, and edit checks.

• Error correction processes: When errors are identified in accepted data (i.e., in the

production version of the database), a formal procedure for correcting those errors should

include a method for logging the correction, providing information to the original data

collector (and agency), and retaining both the original and the corrected data (i.e., the

potential to review the error and the correction). An error log table in the database serves

this purpose. States can set reasonable limits on the types of changes that can be

implemented and for how long after the original submission. Some errors and corrections

are more important than others and impact specific uses. For example, incorrect location

information affects engineering studies and enforcement efforts—the State may wish to

correct these errors whenever they are noticed.

• Data quality performance measures: There are six data quality attributes that NHTSA

recognizes—timeliness, accuracy, completeness, uniformity, integration, and

accessibility—for each of the six core components of a traffic records system. There are

many ways to measure each attribute and there are many databases within the six core

system components. This guide explains performance measurement—how to create

meaningful data quality performance measures that help identify when there is a need to

address a data quality problem and help point to potential solutions.
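
As a minimal sketch of the edit check, validation rule, and error correction components described above, the Python example below applies two validation rules (each tests one data element) and one edit check (logical agreement between two data elements) to a single record, then logs a correction while retaining both the original and the corrected value. The field names (record_id, intersection_type, crash_severity, number_injured), the allowed-value list, the "Injury" severity code, and the CorrectionLog class are hypothetical and chosen only for illustration; a State's own data dictionary and business rules would take their place.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical allowed attributes for one data element (illustration only).
ALLOWED_INTERSECTION_TYPES = {"T-Intersection", "Y-Intersection", "Circular Intersection"}


def validate_record(record: dict) -> list[str]:
    """Return a list of problems found in one record (empty list means it passed)."""
    problems = []

    # Validation rule: one data element tested against its allowed values.
    if record.get("intersection_type") not in ALLOWED_INTERSECTION_TYPES:
        problems.append("intersection_type: value not in allowed list")

    # Validation rule: one data element tested against a defined range.
    injured = record.get("number_injured")
    if not isinstance(injured, int) or injured < 0:
        problems.append("number_injured: must be a non-negative integer")

    # Edit check: logical agreement between two data elements.
    if record.get("crash_severity") == "PDO" and isinstance(injured, int) and injured > 0:
        problems.append("crash_severity/number_injured: PDO crash lists injured persons")

    return problems


@dataclass
class CorrectionLog:
    """Hypothetical error log that keeps the original and the corrected value."""
    entries: list = field(default_factory=list)

    def correct(self, record: dict, element: str, new_value, corrected_by: str) -> dict:
        entry = {
            "record_id": record.get("record_id"),
            "element": element,
            "original": record.get(element),   # retained for later review
            "corrected": new_value,
            "corrected_by": corrected_by,
            "logged_on": date.today().isoformat(),
        }
        self.entries.append(entry)
        record[element] = new_value            # apply the correction to the record
        return entry


if __name__ == "__main__":
    report = {"record_id": "C-0001", "intersection_type": "T-Intersection",
              "crash_severity": "PDO", "number_injured": 2}
    print(validate_record(report))             # problems to show the data collector
    log = CorrectionLog()
    log.correct(report, "crash_severity", "Injury", corrected_by="qc_reviewer")
    print(validate_record(report), log.entries)
```

The same validate_record function could run in the data collection software before submission and again at data intake, which mirrors the two uses of edit checks and validation rules noted in the list above.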
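
The periodic QC analysis and data audit components in the list above could be sketched along the following lines. This is an illustration under stated assumptions, not a prescribed method: the function names, the "severity" field, and the 10-percentage-point threshold are hypothetical, and each State would choose its own key fields, comparison periods, and thresholds. The first two functions compare how often each value of a data element appears in newly received data versus past data and flag large shifts for an analyst to investigate; the third draws a random selection of reports for expert-level review.

```python
import random
from collections import Counter


def value_shares(records: list, element: str) -> dict:
    """Share of records taking each value of one data element (missing counts as None)."""
    counts = Counter(r.get(element) for r in records)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items()} if total else {}


def flag_shifts(past: list, new: list, element: str, threshold: float = 0.10) -> dict:
    """Flag values whose share changed by more than `threshold` between two periods.

    The threshold is a hypothetical default; a flagged shift is a prompt to
    investigate, not proof of a data quality problem.
    """
    before = value_shares(past, element)
    after = value_shares(new, element)
    flagged = {}
    for value in set(before) | set(after):
        change = after.get(value, 0.0) - before.get(value, 0.0)
        if abs(change) > threshold:
            flagged[value] = round(change, 3)
    return flagged


def audit_sample(records: list, n: int, seed=None) -> list:
    """Random selection of up to n reports for expert-level review."""
    rng = random.Random(seed)  # a fixed seed makes the selection repeatable
    return rng.sample(records, min(n, len(records)))


if __name__ == "__main__":
    past = [{"severity": "PDO"}] * 70 + [{"severity": "Injury"}] * 30
    new = [{"severity": "PDO"}] * 90 + [{"severity": "Injury"}] * 10
    print(flag_shifts(past, new, "severity"))
    print(len(audit_sample(new, 5, seed=1)))
```

Because missing values are counted as their own category, a sudden rise in blank fields is flagged in the same way as a shift between coded values.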

Stakeholders

Data quality management is the concern of those who collect, manage, and use traffic records

data for decisions impacting traffic safety. The stakeholders in data quality management come

from local, Tribal, State, and Federal Government agencies. They may also be members of the

public, research organizations, and advocacy groups. Important roles and responsibilities in a

data quality management program include:

• Data owners/system administrators/data managers: These are the custodial agencies

and designated positions, given authority (often defined in statute) over data sources. The

data owner may be charged with establishing the official, required contents and format of

data collection forms; implementing and meeting State and Federal standards or

guidelines for collection, maintenance, and access to data; and managing staff and

resources required to maintain the system. The person exercising this authority may or

may not be an information technology professional but is often tasked with overseeing,

managing, and maintaining responsibility for the work of IT staff and IT contractors

supporting the system.

• Data stewards and data custodians: These are often the IT professionals assigned to

maintain and upgrade the system. Where the two roles are separate, data stewards are

tasked with creating datasets, developing policies, and managing high-level

communications about data use and access. Data custodians are IT staff responsible for

the technical aspects of data movement, security, storage, and user access. These

positions are often tasked with system documentation responsibilities.

• Data entry specialists, QA/QC reviewers, and supervisors: These

are levels of staff trained to handle data intake, post-processing, quality reviews, and

oversight. This type of staff member often reports to the data owner as part of the data

system administration team. As data collection operations move from paper-based to

electronic data collection and data transfer, many States have transitioned these positions

toward review roles with expanded responsibilities focused on data quality.

• Data analysts and data scientists/statisticians: These staff may also report to the

system administrator. They develop QA policies and QC analyses to test the quality of

data. They also conduct analyses to support programs and key users. Data analysts may

also help to maintain user documentation for the system and may take on responsibility

for training content development for data collectors. Data scientists and statisticians may

also work on new ways of integrating and analyzing data that will then be used by the

data analysts. Data scientists are often focused on developing tools and automated

systems for others to use.

• Data collectors: Depending on the system, many people may supply records to the

database. This may or may not be their primary job responsibility, and, in most cases,

they do not report to the system administrator, but rather are part of a different office or

agency. For some databases, there are many (sometimes hundreds of) agencies with

thousands of data collectors. The data collector role may include trainers and supervisory

personnel in each agency as well.

• Data users: These are stakeholders in the system by virtue of their need for data and

analytic results. Data users have a defined role in data quality management because they

can provide valuable feedback on the data’s suitability for meeting specific needs. Their

feedback can take the form of error notifications (a record contains mistakes or is missing

critical information) or contributions to the discussion of data needs (e.g., data gaps).

Each person may take on more than one role—a data collector in one scenario may be a data user

in another situation. Data managers are often data users as well. Data quality management

requires the expertise of specialized roles such as system developers in IT, database

administrators, field personnel, supervisors, data entry specialists and QC staff, executive

decision-makers, engineers, planners, statisticians, research scientists, and more.

The work of data quality management is task-oriented but requires planning, oversight, and

cooperation. To be effective, data quality management should involve collaboration among

stakeholders, preferably within a formal data quality management program or data governance

framework. This can be established for individual systems, at the agency level, or statewide,

depending on the capabilities and maturity level of the programs. This guide describes managing

data statewide or agencywide, but the principles of data quality management apply to individual

systems. The same data quality management structures, policies, and procedures can work for all

traffic records systems or a single system.

TRCC Involvement

The State TRCC may be able to facilitate the multi-agency stakeholder engagement that data

quality management requires, especially to meet the need for oversight and collaboration.

Ideally, TRCCs meet regularly throughout the year and are tasked with improving State traffic

records data. They are charged with creating the State TRSP that includes projects proposed by

stakeholders to address problems and take advantage of opportunities for data improvement and

increased efficiency. Their inputs include the data quality management programs, custodial

agencies, and various stakeholders expressing data needs and ideas for improvement. The TRCC

can take on several important roles in data quality management, including:

• Identify stakeholders: The TRCC is a good source of stakeholders for most of the traffic

records system components and the roles and responsibilities defined for the data quality

management program. One possibility is that data quality management can become a

working group within the TRCC.

• Review system edit checks and validation rules: The process of reviewing data edit

checks and validation rules often involves a small group of experts with detailed

knowledge from several perspectives (collectors, managers, and users). The TRCC can

include these people or recruit them specifically for review efforts.

• Define performance measures: Through the performance measurement process, the

TRCC can help data managers define data quality performance measures if agencies need

assistance. This can happen through collaboration among agencies that have successfully

implemented data quality programs and share that knowledge with partners in the TRCC

setting. The TRCC can also take the lead in creating a formal, comprehensive data quality

management program that all agencies can participate in together. Part of that effort

would be the development of data quality performance measures for each of the systems.

• Monitor data quality performance measures and set goals: The data quality

performance measurement effort will include comparisons against baseline performance

and target setting. The TRCC can help agencies establish the goals for data quality

performance and monitor the achievement of the projects aimed at improving traffic

records quality by including periodic reports in the TRCC meetings.

• Work with or foster data governance efforts: The TRCC can contribute to data

governance efforts within agencies or at the statewide level by sharing its knowledge of

data quality management for the State’s traffic records system. The formal policies,

procedures, roles, and responsibilities in data quality management are all like those

needed for data governance. The two processes can work well together and the TRCC

can share its progress with the data governance group or borrow from what that group has

already accomplished.

Consideration of Data Quality Management When Updating Data Systems

Data quality management programs involve several activities that can be supported with

automated tools or database records. These include analyses that are run periodically (e.g., trend

analyses and comparisons to prior years’ data), reviews and updates to system edit checks or

validation rules, and creation of error logs when data is corrected. While it is possible to add

features to an existing system, the cost can be quite high and the timeline for execution may be

long. The costs and timing are barriers if the work is not considered part of a critical

departmental priority or if the requested change will not generate revenue. When departments are

upgrading their systems, however, the opportunity arises to include functionality that will

support data quality management as part of the new system specification. At that time, the

additional cost for adding a feature such as flexible analytic output, edit check specifications

controlled by the agency’s management, or a separate data table to track error corrections may be

marginal.

It is important to have systems designed so that users can specify their own analyses and save

those specifications for reuse and modification. Similarly, if the system design includes an

edit check and validation rule tool, that tool should allow the system’s managers to

write new edits, save them, turn them on and off, and specify what happens when a rule is

violated. Any new edit checks created should be thoroughly vetted in a test environment before

implementation in a production environment to determine that there is no adverse impact to the

system. Also, for the suggested error log data table, the system should track all changes to the

database by who made the change, when they made it, and what the change was so that the data

can always be recovered to the immediately preceding value if desired. These features will give a

State more control over future changes to the system and avoid the costly and time-consuming

need to consult with contractors or IT staff to perform minimal functions such as modifying an

edit check or creating a new analysis. The tools for performing these tasks should be simple to

use and accessible to authorized users based on management’s approval.
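
The error log described above can be sketched in a few lines. This is a minimal illustration, not a specification: it uses an in-memory table rather than a real database, and the names `ChangeEntry`, `AuditedTable`, `update`, and `revert_last`, as well as the record and user identifiers, are hypothetical. It shows one way to record who made a change, when, and what changed, so that the immediately preceding value can be restored.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass
class ChangeEntry:
    """One row of the (hypothetical) error log table."""
    record_id: str
    field_name: str
    old_value: Any
    new_value: Any
    changed_by: str
    changed_at: datetime


@dataclass
class AuditedTable:
    """In-memory stand-in for a database table with a change log."""
    records: dict[str, dict[str, Any]] = field(default_factory=dict)
    log: list[ChangeEntry] = field(default_factory=list)

    def update(self, record_id: str, field_name: str, new_value: Any, user: str) -> None:
        # Log who, when, and what before applying the change.
        record = self.records.setdefault(record_id, {})
        self.log.append(ChangeEntry(record_id, field_name, record.get(field_name),
                                    new_value, user, datetime.now(timezone.utc)))
        record[field_name] = new_value

    def revert_last(self, record_id: str, field_name: str, user: str) -> None:
        """Restore the immediately preceding value; the revert is itself logged."""
        for entry in reversed(self.log):
            if entry.record_id == record_id and entry.field_name == field_name:
                self.update(record_id, field_name, entry.old_value, user)
                return
        raise KeyError(f"no logged change for {record_id}.{field_name}")


table = AuditedTable()
table.update("CR-001", "crash_date", "2023-04-30", user="officer_17")
table.update("CR-001", "crash_date", "2023-04-03", user="clerk_2")       # mistaken edit
table.revert_last("CR-001", "crash_date", user="supervisor_1")           # undo it
print(table.records["CR-001"]["crash_date"], len(table.log))
```

Logging the revert as its own entry keeps the history complete, so a reviewer can see both the mistaken edit and its correction.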

Electronic Data Transfer and How to Use Data Quality Management to Support

EDT

The EDT protocol is NHTSA’s automated transfer of State motor vehicle crash and injury data

from State data repositories. NHTSA uses EDT to advance near real-time data collection and

transfer, enable more timely decision-making, reduce the burden of data collection, improve data

quality, and make data available sooner. Ultimately this supports the Fatality Analysis Reporting

System and Crash Report Sampling System programs, which align to many MMUCC data

elements. The closer a State aligns to MMUCC and other national data standards, the easier it is

to share data and the less work is needed to translate between the State data elements and

NHTSA’s data elements. States can request additional information about EDT by contacting

their NHTSA Regional Offices.

Traffic Records “Twelve Pack”: Six Core Data Systems and Six Data

Quality Attributes

Six Core Data Systems

Six core data systems make up a comprehensive traffic records program. These data systems are

briefly described below. More detailed information about the core data systems and their

components can be found in the advisory.

Crash

The crash data system is the repository for law enforcement-reported motor vehicle traffic crash

reports. The crash system collects and maintains critical crash data used to develop and

implement data-driven traffic safety countermeasures. At a minimum, crash data includes

information about who was involved in the crash, what types of vehicles were involved, when

and where the crash occurred, how the sequence of events of the crash played out, and any

related factors. See the Model Minimum Uniform Crash Criteria (NHTSA, 2024) for more

information on data collected for the crash system.

Interfacing the crash data system with other traffic records data systems can increase the ease

and efficiency of data collection, while creating potential for robust data analysis efforts. Data

analysis provides information about driver behavior, environmental factors, injury severity, and

medical outcomes of crash-related injuries.

Refer to Table 3. Crash System Performance Measures Examples for examples of crash system

performance measures.

Driver

The driver system contains personal information about motor vehicle operators. Each licensed

driver should have one identity, one license, and one driver history record. The driver history

record includes prior traffic crashes, sanctions, convictions, administrative actions, license

classes and endorsements, license issuance and expiration dates, and any restrictions. The driver

system may contain information on unlicensed drivers and out-of-State drivers who were

involved in in-State crashes or received in-State traffic sanctions. Custodial responsibility usually

lies with the State’s Department or Division of Motor Vehicles. See the American Association of

Motor Vehicle Administrators Data Element Dictionary for Traffic Records Systems (2020) for

more information on data collected for the driver system.

Data from the driver system can be used by law enforcement officers to populate data on crash

and incident reports, complete citation forms, and determine appropriate sanctions or charges.

Data analysis of driver system data can identify drivers with a pattern of high-risk behaviors who

pose a safety risk to other road users. Driver data is useful as a source of demographic data and

in effectiveness evaluations for driver training, information campaigns, and various behavioral

countermeasures focusing on specific driver types or violations. Linking driver data to the

citation and adjudication system can be particularly useful because doing so supports improved

updates to records on people and can help States keep better track of repeat violators who may be

subject to enhanced charges.

Refer to Table 4. Driver System Performance Measures Examples for examples of driver system

performance measures.

Vehicle

The vehicle registration system is the repository for information on titled and registered vehicles

within the State. Usually, a State’s Department or Division of Motor Vehicles has custodial

responsibility for this data system. The vehicle system may include information on vehicles

registered out-of-State but involved in a crash or traffic violation in-State. Data housed in the

vehicle system includes vehicle specifications (e.g., make, model), owner/registrant information,

motor carrier information, vehicle history (including recalls), and financial

responsibility. See the American Association of Motor Vehicle Administrators Data Element

Dictionary for Traffic Records Systems (2020) for more information on data collected for the

vehicle system.

Law enforcement officers may use vehicle registration data to populate data on crash, incident,

and citation reports. The Vehicle Identification Number can be used to identify patterns of

specific vehicle involvement in crashes and other traffic-related incidents and to identify stolen

vehicles more readily during traffic stops. When driver and owner records are expected to match

with respect to other key data elements (e.g., address changes), linking the two can help with

data validation and update processes.

Refer to Table 5. Vehicle System Performance Measures Examples for examples of vehicle

system performance measures.

Roadway

The roadway system contains data about the characteristics, conditions, operation, and ownership

of roadways within the State. Ideally, this system includes information on Federal, State, Tribal,

and local roadways. The custodial agency for the roadway system is generally each State’s

Department of Transportation. Examples of data collected in the roadway system include road

classification, condition, number of travel lanes, shoulder type and width, traffic control devices,

pavement type, and average annual daily traffic. See the Federal Highway Administration’s

Model Inventory of Roadway Elements – MIRE 2.0 (Lefler et al., 2017) for more information on

the data collected for the roadway system.

Analysis of roadway data supports network screening and traffic safety countermeasure

development. Integrating roadway and crash or citation data allows for a better understanding of

the circumstances of crashes and other traffic incidents. This combined data analysis can identify

locations for safety improvements and assist project prioritization to allocate funds to high-risk

locations. Creating an interface between the crash report and the roadway system can allow

officers to select the crash location on the State’s linear reference system to populate roadway

data onto the crash report. The LRS and traffic volume data are useful in law enforcement

planning and EMS response. Agencies that prepare community-level safety analyses can use the

roadway spatial data to identify areas of high risk and compare those with information about

their areas that have a high concentration of night life, locations of schools and universities, lack

of sidewalks, density of vulnerable road users, or other factors that may contribute to the risk of

injuries or fatalities. Additional data may include things like prevailing speeds, distance or time

to nearest level 1 or level 2 trauma center, average EMS response times, and other information

not usually captured in a linkage to crash reports alone.

Refer to Table 6. Roadway System Performance Measures Examples for examples of roadway

system performance measures.

Citation and Adjudication

The citation and adjudication system is made up of component repositories that store traffic

citation, arrest, and final disposition of charge data. Responsibility for the systems is

shared among the data-owning agencies, which include Federal, State, Tribal, and local

agencies. A functional traffic records citation and adjudication system relies on the willingness

of data-owning agencies to share data. Ideally, all relevant traffic records-related citations are

housed in a central, statewide repository to allow for thorough data analysis.

Citation and adjudication data are used by the driver and vehicle systems to maintain accurate

driver history and vehicle records. Citation and adjudication data are also used in national safety

data repositories, such as the Problem Driver Pointer System (PDPS) and the Commercial

Driver’s License Information System (CDLIS). Integrating crash and traffic citation data with

other crime data can identify hot spots or high-risk areas to aid in effective resource allocation.

Analyzing citation and adjudication data can also support traffic safety programs by examining

the effectiveness of law enforcement campaigns. Accurately tracking impaired drivers helps

reduce recidivism. See the NHTSA Model Impaired Driving Records Information System (Greer,

2011) for more information.

Refer to Table 7. Citation and Adjudication System Performance Measures Examples for

examples of citation and adjudication system performance measures.

Injury Surveillance System

The injury surveillance system is a network of data repositories, comprising several component

systems (e.g., EMS, trauma registry, emergency department, hospital discharge, vital statistics,

and rehabilitation). The injury system is responsible for tracking the frequency, severity,

causation, cost, and outcome of injuries. The data contained in this system is representative of

the patient care lifecycle. Custodial responsibility is usually shared among several State and

other agencies. Stakeholders from traffic safety, public health, and law enforcement communities

drive the development of a statewide injury surveillance system. The injury surveillance system

family of records begins with a patient care record from EMS response—see the National

Emergency Medical Services Information System’s EMS Data Standard (NHTSA, 2023) for

more information on the data collected by EMS agencies. There is also a national standard for trauma

registry data captured once a patient has been seen in an emergency department and coded into a

trauma registry—see the American College of Surgeons (n.d.) National Trauma Data Bank Data

Standard.

Integrating crash and injury data can provide information about the outcome of injuries sustained

in motor vehicle crashes and other traffic-related incidents. Injury data analysis supports problem

identification, research into prevention, and the development of traffic safety countermeasures.

Analysis of injury data can inform policy-level decision-making, including changes to

legislation, and the allocation of resources. Although no longer directly sponsored by NHTSA,

the data linkage techniques developed for the Crash Outcome Data Evaluation System are still

used by some States to support data analysis, problem identification, project evaluation, and

programmatic decisions. Injury data can also be linked to traffic and roadway data to help inform

planning, safe communities, and transportation equity efforts.

Refer to Table 8. Injury Surveillance System Performance Measures Examples for examples of

injury surveillance system performance measures.

Data Quality Attributes

A comprehensive traffic records program tracks the six core data quality attributes for each of

the six core traffic records data systems. These attributes are briefly described below and are

used as the basis for measuring data quality in the next section.

Timeliness

Timeliness reflects a measure of the length of time between the event and the entry of data into

the appropriate data system. Timeliness could also measure the length of time between when the

data is entered into the system and when the data is available for analysis.
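
As a rough illustration of the first timeliness definition, the sketch below computes the number of days between each event and its entry into the system, then summarizes the result two ways. The sample dates, the 10-day threshold, and the function names are assumptions made for the example.

```python
from datetime import date

# Hypothetical records: (crash date, date entered into the crash system).
reports = [
    (date(2023, 3, 1), date(2023, 3, 4)),
    (date(2023, 3, 2), date(2023, 3, 20)),
    (date(2023, 3, 5), date(2023, 3, 15)),
    (date(2023, 3, 9), date(2023, 3, 10)),
]


def days_to_entry(event: date, entered: date) -> int:
    """Elapsed calendar days between the event and its entry into the system."""
    return (entered - event).days


def percent_within(pairs, threshold_days: int) -> float:
    """Share of records entered within the threshold, as a percentage."""
    on_time = sum(1 for ev, en in pairs if days_to_entry(ev, en) <= threshold_days)
    return 100.0 * on_time / len(pairs)


lags = [days_to_entry(ev, en) for ev, en in reports]
mean_lag = sum(lags) / len(lags)
pct_10 = percent_within(reports, 10)
print(lags, mean_lag, pct_10)
```

A mean can hide a long tail of late reports, so a threshold-based percentage is often easier to track against a target.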

Accuracy

Accuracy measures the degree to which the data is error-free. An error occurs not when the data

is missing (see completeness, later in this section), but when the data in the system is incorrect.

Erroneous data can be difficult to detect, but edit checks and validation rules for data entry can

help minimize errors and direct the user to a more appropriate response. Verifying data with

external sources is another method for identifying errors. Accurate data is error-free, passes edit

checks and validation rules, and is not duplicated within a single database.
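
The sketch below shows one possible shape for edit checks and a duplicate test. The rule names, field names, and sample records are hypothetical, and a production system would likely store rules in a configurable table rather than in code. Note that a missing age passes the range check, because missing values are a completeness issue rather than an accuracy error.

```python
from collections import Counter

# Hypothetical edit checks; each returns True when the record passes.
EDIT_CHECKS = {
    "age_in_range": lambda r: r["driver_age"] is None or 0 <= r["driver_age"] <= 120,
    "injured_not_more_than_occupants": lambda r: r["injured"] <= r["occupants"],
}

records = [
    {"report_id": "A1", "driver_age": 34, "occupants": 2, "injured": 1},
    {"report_id": "A2", "driver_age": 210, "occupants": 1, "injured": 0},
    {"report_id": "A3", "driver_age": 51, "occupants": 1, "injured": 3},
    {"report_id": "A1", "driver_age": 34, "occupants": 2, "injured": 1},  # duplicate
]


def failed_checks(record) -> list[str]:
    """Names of the edit checks this record violates."""
    return [name for name, check in EDIT_CHECKS.items() if not check(record)]


# Map record position -> violated checks, keeping only records with failures.
all_results = {i: failed_checks(r) for i, r in enumerate(records)}
failures = {i: names for i, names in all_results.items() if names}

# Report IDs that appear more than once in the same database.
id_counts = Counter(r["report_id"] for r in records)
duplicate_ids = sorted(rid for rid, n in id_counts.items() if n > 1)

pct_passing = 100.0 * (len(records) - len(failures)) / len(records)
print(failures, duplicate_ids, pct_passing)
```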

Completeness

A complete database contains records for all relevant events, and no record is missing

data. Missing data does not indicate errors (see accuracy, above), but it does make a database

incomplete. Completeness can be measured internally (e.g., the number of records in a system

that are not missing data element selections) or externally (e.g., the percentage of incidents that

are entered into a system out of all known incidents).
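
The two views of completeness can be computed side by side. In this sketch the required fields, the sample records, and the external count of known incidents are all assumed values chosen for illustration.

```python
# Hypothetical list of data elements that must be populated.
REQUIRED = ("crash_date", "county", "injury_severity")

records = [
    {"crash_date": "2023-05-01", "county": "County A", "injury_severity": "B"},
    {"crash_date": "2023-05-02", "county": None, "injury_severity": "C"},
    {"crash_date": "2023-05-03", "county": "County B", "injury_severity": ""},
    {"crash_date": "2023-05-04", "county": "County C", "injury_severity": "O"},
]

# Hypothetical count of incidents known from an external source.
known_incidents = 5


def is_complete(record) -> bool:
    """True when every required element has a non-empty value."""
    return all(record.get(name) not in (None, "") for name in REQUIRED)


# Internal: share of records with no missing required elements.
internal_pct = 100.0 * sum(is_complete(r) for r in records) / len(records)

# External: share of known incidents that made it into the system.
external_pct = 100.0 * len(records) / known_incidents
print(internal_pct, external_pct)
```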

Uniformity

A database is uniform when there is consistency among records and among procedures for data

collection across the State. Uniformity should be measured against an independent data standard,

preferably a national standard if available. Uniformity may assess consistency among data

collection sources (e.g., law enforcement, health care providers, courts, vehicle registration

clerks, etc.) that provide records to the database. Uniformity may also assess how closely a data

system aligns to the applicable national standard, such as MMUCC and the American

National Standard Manual on Classification of Motor Vehicle Traffic Crashes (ANSI D.16-2017)

for crash data, NEMSIS for EMS data, MIRE for roadway data, and others.
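
One simple way to express alignment with a standard is the share of the standard's data elements that also appear in the State's data dictionary. The sketch below assumes that approach; the element names are invented for illustration, and a real comparison would use the published element list of the applicable standard and would also compare attribute values, which this presence check ignores.

```python
# Hypothetical element names, not taken from any published standard.
standard_elements = {
    "crash_date", "crash_time", "first_harmful_event", "weather", "light_condition",
}
state_elements = {
    "crash_date", "crash_time", "weather", "road_surface", "officer_badge",
}

aligned = standard_elements & state_elements      # present in both
missing = standard_elements - state_elements      # in the standard, absent from the State
alignment_pct = 100.0 * len(aligned) / len(standard_elements)
print(sorted(missing), alignment_pct)
```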

Integration

The ability to link data records from one traffic records data system to records in another of the

six core component data systems presents States with an opportunity to conduct complex data

analysis. This integration between two or more traffic records systems involves matching records

from one system to another by using common or unique identifiers. Rather than measuring

performance in one system only, integration measures the ability of two or more systems to

successfully match their records. Integration can be measured at the database level (e.g., two

traffic records source files are linked, so a State might show a simple performance measure value

of “two”). A more informative measure is the percentage of records that can be matched from

two or more data systems. For example, 1,000 people were transported to hospital emergency

departments because of crash-related injuries and 800 were admitted to the hospitals. The

integration of crash data and health data would be measured on the expectation of up to 800

linked in-patient records and up to 1,000 EMS and emergency department records. If 600 in-

patient records are successfully linked with crash records, the measure is 75 percent.
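
The arithmetic in this example can be checked with a short sketch. The function name `linkage_rate` and the person identifiers in the second part are hypothetical; the second part assumes, for simplicity, that both systems share an exact common identifier, which is often not the case in practice.

```python
def linkage_rate(linked: int, expected: int) -> float:
    """Percentage of expected records that were successfully linked."""
    if expected <= 0:
        raise ValueError("expected must be positive")
    return 100.0 * linked / expected


# Values from the example in the text: 600 of 800 in-patient records linked.
inpatient_rate = linkage_rate(600, 800)

# Matching on a shared identifier (hypothetical IDs).
crash_person_ids = {"P01", "P02", "P03", "P04", "P05"}
inpatient_person_ids = {"P02", "P03", "P05", "P09"}
matched = crash_person_ids & inpatient_person_ids
match_rate = linkage_rate(len(matched), len(inpatient_person_ids))
print(inpatient_rate, sorted(matched), match_rate)
```

The denominator is the number of records expected to link, not the size of either database, which is why the in-patient measure is based on 800 rather than 1,000.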

Accessibility

Accessibility is unique among the traffic records data quality attributes. Unlike the first five,

which focus on the overall quality of data in the six core component data systems, accessibility

evaluates the ability of data users to obtain data. Data users and traffic safety stakeholders need

timely access to data that is accurate, complete, and uniform. However, measuring users’ ability

to access the data they need for traffic safety programs and data-driven decision-making can be

difficult.

One method for measuring accessibility is to consider it in terms of customer satisfaction.

Legitimate data users must be able to request and obtain the data necessary for their business

needs. Are these data users able to access the relevant data? Are they satisfied with the data they

can access and the process in place for obtaining the data? To answer these questions and

measure a system’s accessibility, States can develop and administer a survey to data users. States

should first identify who the principal users of the data are. Next, query the principal users to

assess both their ability to access the data and their level of satisfaction with the data (e.g., in

terms of timeliness, accuracy, completeness, uniformity). Finally, States should document the

methodology and responses from their principal users.

Another method for measuring accessibility is to measure the number of unique users who access

the data system over a set period. This is possible by tracking internal system data, such as

logins, data queries, or data extracts. With a nationwide increase in electronic data reporting,

many States are offering public data dashboards. These dashboards allow access to traffic safety

stakeholders who are not principal data users and would not historically have access to the data.

Tracking the number of website or dashboard hits, or the number of data queries run, can also

inform States of data accessibility.
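
Counting unique users over a set period can be sketched as follows. The log layout (user, date, action), the user names, and the quarter boundaries are assumptions; a real system would read these from its own access logs.

```python
from datetime import date

# Hypothetical access log entries: (user, date, action).
access_log = [
    ("analyst_a", date(2024, 1, 5), "query"),
    ("analyst_b", date(2024, 1, 9), "extract"),
    ("analyst_a", date(2024, 1, 20), "login"),
    ("planner_c", date(2024, 2, 2), "query"),
    ("analyst_b", date(2024, 4, 1), "query"),
]


def unique_users(log, start: date, end: date) -> set[str]:
    """Distinct users with at least one logged action in the period."""
    return {user for user, day, _ in log if start <= day <= end}


def count_actions(log, action: str, start: date, end: date) -> int:
    """Number of logged actions of one type in the period."""
    return sum(1 for _, day, act in log if act == action and start <= day <= end)


q1_start, q1_end = date(2024, 1, 1), date(2024, 3, 31)
q1_users = unique_users(access_log, q1_start, q1_end)
q1_queries = count_actions(access_log, "query", q1_start, q1_end)
print(len(q1_users), q1_queries)
```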

Performance Measurement

Performance measures are intended for use by traffic safety stakeholders to develop, implement,

and monitor traffic records data systems with a focus on data quality. They can be used in

strategic plans, data improvement grant processes, and data governance efforts. They should be

used to quantify system improvements and for ongoing monitoring of the quality of traffic safety

data collected by a State’s traffic records system. The information outlined in this document is

expected to help stakeholders quantify and evaluate quality improvements to their traffic records

systems.

The data quality performance measure examples in this guide serve to assist States in developing

their own measures to monitor and improve the quality of traffic records data. Performance

measures gauge the performance of a specific data system in one of the six core traffic records

component systems. The measures should be based on State needs and can be used to track

progress in a TRSP, HSP, SHSP, or any system-specific planning efforts. Performance measures

help accurately measure the impact of system improvement or enhancements, assess the quality

of data being captured, create transparency, and answer important questions about overall system

performance. Performance targets can be used to measure past, current, and future performance.

They can help identify both weaknesses and strengths.

Performance measures can originate from several sources. One important origin is the State’s QC

data analyses resulting in problem identification. When data quality problems are identified, one

QC step may be to establish a performance measure that quantifies the problem and helps the

State monitor improvements toward an established goal over time. Another frequent source of

performance measures is data improvement projects, especially those funded with traffic records

grants and highlighted in the State’s traffic records strategic plan. A well-constructed project

description will include data quality performance measures with a baseline value and predicted

improvements over the life of the project. States and agencies can choose which of their data

quality performance measures are included in strategic plans, reports to the TRCC or NHTSA,

and which are kept primarily for internal tracking by the data custodians and data managers.

Different measures can serve different purposes. The important common feature is that all the

performance measures are quantifiable and that they each serve a specified need for the State.

Defining Data Quality Performance Measures

A complete data quality performance measure arises from an identified need, works toward a

goal, identifies an objective, contains a baseline measure, and defines a performance target

within a set timeframe. Table 1 shows an example of the components of performance

measurement and how these parts fit together.

In this example, a State identified the need for a timeliness performance measure for crash report

submissions. The State noted the data system and data quality attribute being measured and set

the goal of increasing timeliness. Next, the State created a time-bound objective (the next 5

years) over which the State would achieve the timeliness improvement. The State defined the

performance measure using a quantitative statement to achieve the desired 10-day submission

performance target: move from the baseline of 65 percent (year 0, 2023) of crash reports

entered into the Crash Data System within 10 days after the crash to the performance target of 95

percent by year 5 (2028). Further, the State set annual performance values for each year of the

defined time period to compare and track performance. The table shows planned and actual

performance, providing evidence that the State has tracked performance and that it has achieved

its crash report submission timeliness goal.

Table 1. Performance Measurement Components

Need                    Establish a timeliness performance measure related to crash
                        report (electronic and paper) submission.
Data System             Crash
Data Quality            Timeliness
Goal                    Increase the timeliness of traffic records data.
Objective               Improve the timeliness of the Crash Records Data System in
                        the next 5 years.
Performance Measure     Increase the percentage of crash reports entered in the Crash
                        Records Data System within 10 days after the crash, from 65%
                        in 2023 to 95% in 2028.
Baseline (e.g., 2023)   65%
Performance Target      95%

Performance Values      Planned   Actual
Year 1 (e.g., 2024)     70%       72%
Year 2 (e.g., 2025)     75%       75%
Year 3 (e.g., 2026)     80%       84%
Year 4 (e.g., 2027)     88%       90%
Year 5 (e.g., 2028)     95%       95%

Performance measures should:

• Be quantifiable—able to be expressed or measured as a quantity (amount or number).

• Be measurable—able to estimate or assess the extent, quality, value, or effect of

something.

• Be meaningful and useful to States.

• Contain a baseline (current/historic value).

• Reference a target.

When the performance measure components are put together to form a statement, it should read

something like this:

• Increase the percentage of crash reports entered in the crash records data system within

10 days after the crash, from 65 percent in 2023 to 95 percent in 2028.

Over the life of the performance measure, the State can track actual progress against the target

measures to see if it is on course. For example, suppose that in year 2 the State finds it is only at

70 percent instead of the target measure of 75 percent. The State now knows it needs to adjust its

efforts to get back on track to reach the year 3 target measure of 80 percent. Setting and routinely

monitoring smaller targets (e.g., quarterly) can add greater safety nets to help keep projects from

straying off course.
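
The components of a performance measure and the progress check described above can be represented as a small data structure. This is a sketch only: the class name `PerformanceMeasure` and its fields are hypothetical, the planned values are those from Table 1, and the year 2 actual of 70 percent is the hypothetical shortfall from the example in the text.

```python
from dataclasses import dataclass


@dataclass
class PerformanceMeasure:
    description: str
    baseline_year: int
    baseline_pct: float
    target_year: int
    target_pct: float
    planned: dict[int, float]  # planned value for each interim year

    def statement(self) -> str:
        """Assemble the components into a single performance measure statement."""
        return (f"Increase {self.description}, from {self.baseline_pct:g} percent in "
                f"{self.baseline_year} to {self.target_pct:g} percent in {self.target_year}.")

    def gap(self, year: int, actual_pct: float) -> float:
        """Actual minus planned for the year; a negative value means behind plan."""
        return actual_pct - self.planned[year]


measure = PerformanceMeasure(
    description=("the percentage of crash reports entered in the crash records "
                 "data system within 10 days after the crash"),
    baseline_year=2023, baseline_pct=65,
    target_year=2028, target_pct=95,
    planned={2024: 70, 2025: 75, 2026: 80, 2027: 88, 2028: 95},
)

print(measure.statement())
year2_gap = measure.gap(2025, 70)  # hypothetical year 2 actual of 70 percent
print(year2_gap)
```

The same structure could hold quarterly planned values if a State chooses to monitor smaller targets.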

Identifying Stakeholders

Defining Stakeholders

When creating performance measures for traffic records systems, first identify stakeholders and

system owners. Below is a list of questions agencies can use to identify stakeholders.

• Who is responsible for oversight of the system?

• Who can effect changes, improvements, or enhancements to the system?

• What people have a vested interest in how the system performs and the quality of data it

collects?

• Whose work is impacted by the quality of data collected by the system?

• Who can help determine if there are mechanisms in place to collect data for the

performance measures selected?

• Who can help evaluate if the performance measures established have meaning,

usefulness, and relevance to stakeholders and system owners?

Various stakeholders and system owners can develop meaningful and realistic performance

measures. Stakeholders of traffic records systems may include system users, data collectors, data

users, system owners and managers, TRCC members, and others. Identify people from each of

these groups for each respective traffic records system before beginning to draft performance

measures. Networking and building connections with traffic records professionals

across State agencies and all traffic records systems is vital to a successful TRCC and to

establishing useful and meaningful performance measures for each system.

Addressing Challenges

States may not always have someone with the expertise to draft appropriate performance

measures for each respective system. The State Highway Safety Office has program management

authority over NHTSA-funded traffic records grants and should know how performance

measures are created and reported on a statewide level. Often this task falls to the TRCC, or the

person(s) tasked with updating the State’s TRSP. Also, lack of TRCC involvement from data

system representatives can make it harder to develop performance measures representative of the

core component systems. Lack of contributions to TRSP updates can also make identifying

performance measures challenging. States are encouraged to establish contacts, network, and build

and maintain relationships with traffic records system owners, database managers and users, and

other stakeholders. States may want to focus on the areas with the greatest potential for

improving data quality and enhancing the collection of traffic safety data.

The TRCC’s Role in Performance Measurement

Performance measures are designed to provide important actionable information to data system

managers. Developing, implementing, and maintaining a TRSP that includes each core component

area is a key responsibility of the TRCC. Ideally, each traffic

records system would have meaningful and useful performance measures including baselines,

goals, and targets. It is important when establishing performance measures for a data system that

the measures selected are meaningful to system owners and stakeholders.

Routine monitoring of performance measures and reporting on progress toward performance

targets at TRCC meetings are important to an effective strategic plan. The tracking of problems,

successes, and solutions benefits the entire traffic safety community. This helps establish that the

performance measures in place continue to be useful and can also help to identify when it may be

time to review, update, or revise existing performance targets as traffic records systems evolve

through the years.

Developing Performance Measures

Well-crafted performance measures with meaningful goals, baselines, and targets are crucial to

monitoring a system’s progress over time and provide a mechanism to evaluate system

improvements or enhancements. States might consider asking the following questions during the

development of each performance measure.

• Is it meaningful?

• Is it relevant?

• Is it useful or does it have value to data collectors, data users, or system owners?

• Is it realistic (i.e., is it achievable)?

• Do changes in the measure reflect changes in the data’s quality?

• Is it reliable? Will stakeholders trust what the measure tells them?

• Is it helpful to inform decision-making when evaluating system improvements or

enhancements?

• Is the quality of data collected improving?

SMART

When establishing performance measures for a traffic records system, incorporate the following

SMART principles.

• Specific: Measures are well-defined, appropriate, understandable, and target the area of

improvement.

• Measurable: Measures are quantifiable and detect changes over time using data that are

readily available at reasonable cost.

• Attainable: Measures are realistic, achievable, and reasonable.

• Relevant: Measures are pertinent to the traffic records system, have meaning and

usefulness to a system’s owners, managers, and users and align with the values, long-

term goals, and objectives for the system.

• Timely or Time-Based: Measures have a defined period during which improvement will

be measured or quantified; from a beginning date to an end date that relates back to the

goals and objectives.

Steps to Create Measures

The steps for creating quality performance measures are:

1. Engage stakeholders: Identify and seek input from those most affected by the data

quality issues (collectors, managers, and users) to be addressed.

2. Identify the problem: Describe the data quality issue(s) with as much precision as

possible using available data.

3. Establish the baseline: Use historical or most-recent-year data as the baseline (starting

point) against which to plan and assess improvements.

4. Set a goal: Establish an ultimate quantitative value of the performance measure to

achieve.

5. Define the time period: Establish the time range over which the goal will be achieved.

6. Plan the action steps: Develop an action plan for achieving the goal over the established

time period.

7. Set targets: Set numeric targets throughout the identified time period (e.g., quarterly,

yearly) of the action plan.

8. Measure progress: Compare the actual performance values and the planned performance

values periodically throughout the identified time period, and report at least annually.

9. Monitor and adjust: Judge the continued validity and utility of the performance

measure, goals, targets, time periods, and action plans. Update or adjust as needed.

10. Evaluate: Assess the actual improvements in data quality against the plan.
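
Several of the steps above (baseline, goal, time period, and targets) can be captured in a small data structure. The sketch below is one possible arrangement, not a prescribed format. The class and field names are hypothetical, and the evenly spaced yearly targets are an assumption made for illustration; a State may space its targets differently to match its action plan.

```python
from dataclasses import dataclass


@dataclass
class PerformanceMeasure:
    description: str  # what is being measured (step 2)
    baseline: float   # starting value, in percent (step 3)
    goal: float       # ultimate value, in percent (step 4)
    start_year: int   # baseline year (step 5)
    end_year: int     # year by which the goal is to be met (step 5)

    def __post_init__(self):
        if self.end_year <= self.start_year:
            raise ValueError("end_year must be later than start_year")

    def statement(self):
        """Assemble the components into a performance measure statement."""
        return (
            f"Increase {self.description}, from {self.baseline:g} percent in "
            f"{self.start_year} to {self.goal:g} percent in {self.end_year}."
        )

    def yearly_targets(self):
        """Evenly spaced yearly targets (step 7); the spacing is an assumption."""
        years = self.end_year - self.start_year
        step = (self.goal - self.baseline) / years
        return {
            self.start_year + i: round(self.baseline + step * i, 1)
            for i in range(1, years + 1)
        }


measure = PerformanceMeasure(
    description=(
        "the percentage of crash reports entered in the crash records "
        "data system within 10 days after the crash"
    ),
    baseline=65,
    goal=95,
    start_year=2023,
    end_year=2028,
)
print(measure.statement())
print(measure.yearly_targets())
```

Keeping the baseline, goal, and targets together in one record makes it easier to report planned against actual values at each review (step 8).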

Using Performance Measures

Establishing performance measures for each traffic records data system allows States to

continually seek improvement in data quality. By adopting SMART performance measures,

States can monitor the quality of collected data and manage regressions in data quality. Decision-

makers rely on data that are trustworthy, reliable, and accurate to inform their decisions and

efficiently allocate resources. States use traffic records data to develop and implement

countermeasures to increase traffic safety, save lives, and reduce injuries on roadways.

When creating performance measures, define baseline values, performance targets, and goals

from which to measure and evaluate progress. It is also important to decide on a process for

regular monitoring of the performance measure, whether that be on a monthly, quarterly, bi-

annual, or annual basis. Without baselines, targets, goals, and regular monitoring, performance

measures are not useful. States can measure progress and regression by comparing the current

system status to the baseline. Establish a standard schedule for monitoring performance

measures, either through regular reporting to stakeholders at quarterly TRCC meetings, or along

some other consistent and relevant time interval. Performance measures that are not regularly

monitored may be forgotten and States may miss out on important information that could better

inform decision-making. Tracking performance measures will help States accurately assess

situations and make the necessary adjustments to get programs back on track when issues arise.

The scope of performance measures is variable and can change over time. Some measures assess

performance at a statewide or systemwide level, while others assess the performance of specific

jurisdictions, agencies, or organizations. States may be tempted to focus only on the system as a whole, but

tailoring performance measures to local groups can provide valuable insight to data collectors

and users at the local level. Local data collectors and users can assist with identifying and

addressing data quality trends that may be applicable statewide. For example, States can create a

performance measure for the data quality attribute Timeliness that assesses the time between a

crash incident occurring and when the corresponding crash report is submitted to the State’s

crash database. This can be tracked statewide, but applying the performance measure to local law

enforcement agencies can highlight areas that fall below the State’s average. Working with local

agencies to improve their reporting capabilities improves the quality of the data throughout the

system. States can also use performance measures to assess individual performance by county

sheriff offices, city police departments, driver license service centers, judicial districts, court

systems, court or county clerks, hospitals, trauma centers, or others. Applying performance

measures to the local level can also help States identify gaps in reporting. States that track only the

statewide number of crash reports submitted may not notice when an agency, city, or county slows

down or stops submitting crash reports. States can identify and correct data quality anomalies by

routine monitoring of performance at the local level.
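
As a rough sketch of applying a Timeliness measure at both levels, the Python below computes the percentage of crash reports entered within 10 days, statewide and by agency, and lists the agencies that fall below the statewide figure. The agency names, dates, and record layout are invented for illustration and do not come from any State's data.

```python
from collections import defaultdict
from datetime import date

# Hypothetical records: (agency, crash date, date entered in the State database).
reports = [
    ("City PD A", date(2023, 3, 1), date(2023, 3, 5)),
    ("City PD A", date(2023, 3, 2), date(2023, 3, 9)),
    ("County SO B", date(2023, 3, 1), date(2023, 3, 20)),
    ("County SO B", date(2023, 3, 4), date(2023, 3, 10)),
    ("County SO B", date(2023, 3, 6), date(2023, 4, 2)),
]


def percent_timely(rows, max_days=10):
    """Percentage of reports entered within max_days of the crash."""
    if not rows:
        return None
    timely = sum(
        1 for _, crash, entered in rows if (entered - crash).days <= max_days
    )
    return 100 * timely / len(rows)


# Statewide value of the measure.
statewide = percent_timely(reports)

# The same measure applied to each agency.
by_agency = defaultdict(list)
for row in reports:
    by_agency[row[0]].append(row)
agency_rates = {agency: percent_timely(rows) for agency, rows in by_agency.items()}

# Agencies performing below the statewide figure.
below_statewide = sorted(
    agency for agency, rate in agency_rates.items() if rate < statewide
)
print(statewide, agency_rates, below_statewide)
```

In this invented data set the statewide figure hides a wide gap between the two agencies, which is the kind of detail a local-level measure is meant to expose.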

For example, in the process of monitoring report submissions each month or quarter, a State

identifies that a certain department has ceased sending reports to the repository, or that its report

submission volume has decreased by an abnormal percentage for a given time period. This

enables system managers to take corrective action so that system data remain complete,

timely, and accurate. The information gained from monitoring submissions at the agency level in

this scenario informs the system manager and allows them to reach out to the relevant

department and troubleshoot any problems to facilitate the restoration of processes that might

have been interrupted.
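
The scenario above could be monitored with a check along the following lines. This is a sketch under stated assumptions: the guide does not define what counts as an abnormal decrease, so the 50 percent threshold, the function name, and the sample counts are all hypothetical.

```python
def flag_submission_gaps(previous, current, max_drop_pct=50):
    """Return agencies that stopped reporting or whose volume dropped sharply.

    previous and current map agency name to report count for two comparable
    periods (for example, consecutive quarters). max_drop_pct is an assumed
    threshold, not a value taken from the guide.
    """
    flags = {}
    for agency, before in previous.items():
        if before == 0:
            continue  # no prior volume to compare against
        now = current.get(agency, 0)
        drop_pct = 100 * (before - now) / before
        if now == 0:
            flags[agency] = "no reports received"
        elif drop_pct >= max_drop_pct:
            flags[agency] = f"volume down {drop_pct:.0f} percent"
    return flags


# Hypothetical quarterly report counts by agency.
last_quarter = {"City PD A": 120, "County SO B": 80, "Town PD C": 40}
this_quarter = {"City PD A": 115, "County SO B": 20}

flags = flag_submission_gaps(last_quarter, this_quarter)
print(flags)
```

A flag is only a prompt for follow-up. Seasonal variation or a change in jurisdiction can also lower counts, so the system manager would still need to contact the agency to learn the cause.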

States can use performance measures in many ways. Performance measures can be used to

monitor an overall system’s data quality, but also to see fluctuations or changes in reporting from

different jurisdictions, agencies, or geographic areas over time. Performance measures can help

States focus on specific user groups who may need additional training or assistance to verify that

their reporting is timely, reliable, and accurate.

Retiring Measures

Traffic records systems evolve over time. The quality of data collected by a system also changes

as part of that evolution. Performance measures that were established while a system was in its

infancy, or immediately after rollout, may not have the same merit years later. For example,

there are many performance measures in place across various States that measure percentage or

volume of electronic reporting or paper-based/manual reporting to the statewide system. If a

system is still transitioning users over to electronic reporting, that measure might still be relevant

and meaningful. But for States whose systems have achieved full electronic

reporting, or have reached the point where most records are received electronically, those

original measures may no longer be relevant. Performance measures must evolve with their

respective traffic records systems.
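
One way to prompt such a review is to flag measures that have stayed at or near their ceiling for several consecutive reporting periods. The sketch below assumes a 99 percent ceiling and four periods; both values, along with the function name and sample history, are hypothetical. The flag only suggests that a measure be reviewed, and the decision to retire it remains with the State.

```python
def review_candidate(history, ceiling=99.0, periods=4):
    """True if the most recent `periods` values are all at or above `ceiling`.

    history is a list of measure values in chronological order. The default
    ceiling and period count are assumptions chosen for illustration.
    """
    recent = history[-periods:]
    return len(recent) == periods and all(value >= ceiling for value in recent)


# Hypothetical percentage of reports received electronically, by period.
electronic_pct = [72.0, 88.5, 97.0, 99.4, 99.8, 100.0, 100.0]
print(review_candidate(electronic_pct))

# A measure still in transition is not flagged.
print(review_candidate([60.0, 70.0, 80.0, 85.0]))
```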

Agencies should regularly ask themselves whether each measure is still a good performance measure:

• Is it still meaningful?

• Does it still have relevance?

• Does it still provide actionable information to help drive decisions?